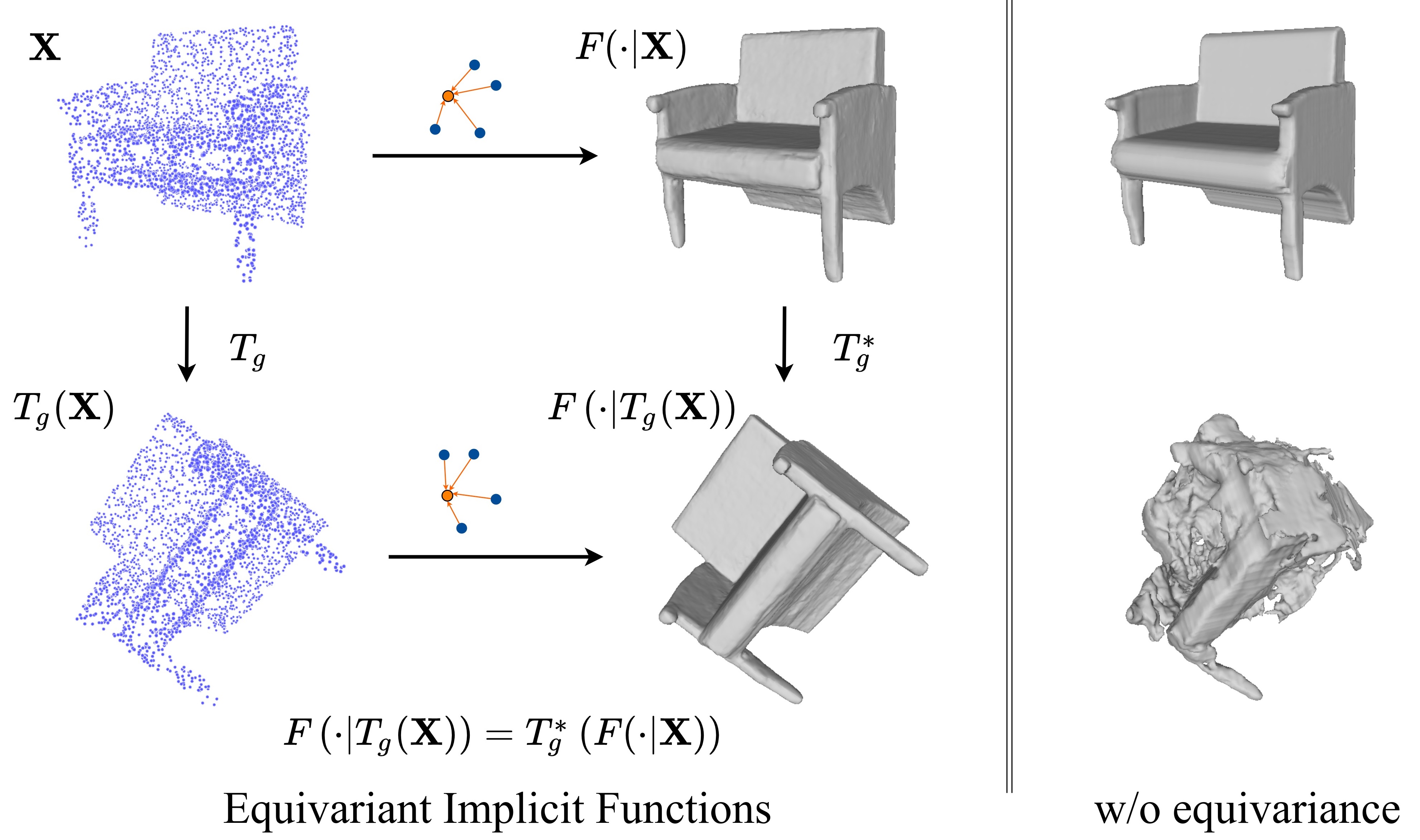

Method

Our equivariant graph implicit function infers the implicit field of a 3D shape from a sparse point cloud observation. When a transformation (rotation, translation, and/or scaling) is applied to the observation, the resulting implicit field is guaranteed to equal the field inferred from the untransformed input with the corresponding transformation applied to it (middle). This equivariance enables generalization to unseen transformations, under which existing models often struggle (right).
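The equivariance property can be checked numerically on a toy example. The sketch below is only an illustration, not our network: `toy_field` is a hypothetical hand-written stand-in for an implicit field (nearest-point distance, normalised by the spread of the cloud), and `rotate_z` and `similarity` are helper names introduced here. It shows that querying the transformed point against the transformed observation gives the same value as the untransformed query.

```python
import math

def rotate_z(p, angle):
    """Rotate a 3D point about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y, s * x + c * y, z)

def similarity(p, angle, scale, shift):
    """Apply a rotation, then a uniform scaling, then a translation."""
    r = rotate_z(p, angle)
    return tuple(scale * ri + ti for ri, ti in zip(r, shift))

def toy_field(query, cloud):
    """Hypothetical stand-in for an implicit field (NOT the learned model):
    distance from the query to the nearest observed point, divided by the
    mean distance of the cloud to its centroid."""
    n = len(cloud)
    centroid = tuple(sum(p[i] for p in cloud) / n for i in range(3))
    spread = sum(math.dist(p, centroid) for p in cloud) / n
    return min(math.dist(query, p) for p in cloud) / spread

# A tiny "sparse observation" and one query location.
cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
query = (0.3, 0.2, 0.5)

# Arbitrary similarity transformation: angle, scale, translation.
args = (0.7, 2.5, (1.0, -2.0, 0.5))

before = toy_field(query, cloud)
after = toy_field(similarity(query, *args),
                  [similarity(p, *args) for p in cloud])

# The two values agree up to floating-point error.
print(before, after)
```

The toy field is equivariant by construction; in our model the same property is obtained through the layer design described in the following sections.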

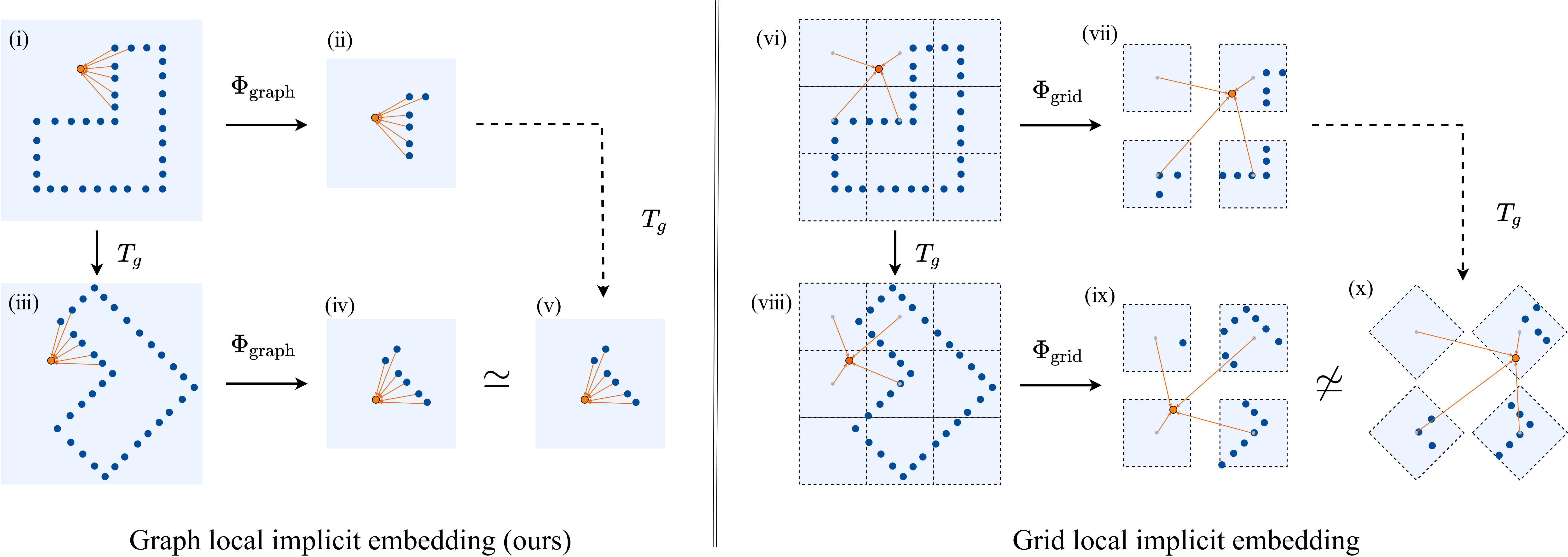

Graph-structured local implicit feature embedding

To achieve high-fidelity 3D reconstruction of local details, we embed the implicit function in local k-NN graphs. The architecture is robust to similarity transformations (rotation, translation, and scaling), whereas existing local implicit embedding methods based on convolutional grid structures are sensitive to these transformations.
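As a rough illustration of why a k-NN graph is less sensitive to such transformations than a fixed grid, the sketch below builds the local graph around a query point using brute-force search. The function names `knn_indices` and `local_edges` are assumptions made for this example and do not refer to our implementation. Neighbor selection depends only on pairwise distances, and the edge offsets are relative vectors, so a translation of the whole scene leaves both unchanged.

```python
import math

def knn_indices(query, points, k):
    """Indices of the k points closest to `query` (brute-force search)."""
    order = sorted(range(len(points)),
                   key=lambda i: math.dist(query, points[i]))
    return order[:k]

def local_edges(query, points, k):
    """Edges of the local graph: (neighbor index, offset from the query)."""
    return [(i, tuple(pi - qi for pi, qi in zip(points[i], query)))
            for i in knn_indices(query, points, k)]

points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0),
          (3.0, 3.0, 3.0), (0.0, 0.0, 0.5)]
query = (0.1, 0.0, 0.0)
edges = local_edges(query, points, k=3)

# Translate the whole scene: the graph connectivity and offsets are preserved.
shift = (5.0, -1.0, 2.0)
moved_points = [tuple(a + b for a, b in zip(p, shift)) for p in points]
moved_query = tuple(a + b for a, b in zip(query, shift))
moved_edges = local_edges(moved_query, moved_points, k=3)
```

Rotation and uniform scaling also preserve the neighbor sets, since they preserve the ordering of distances; the offsets then rotate or scale along with the input, which is what the equivariant layers are designed to handle.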

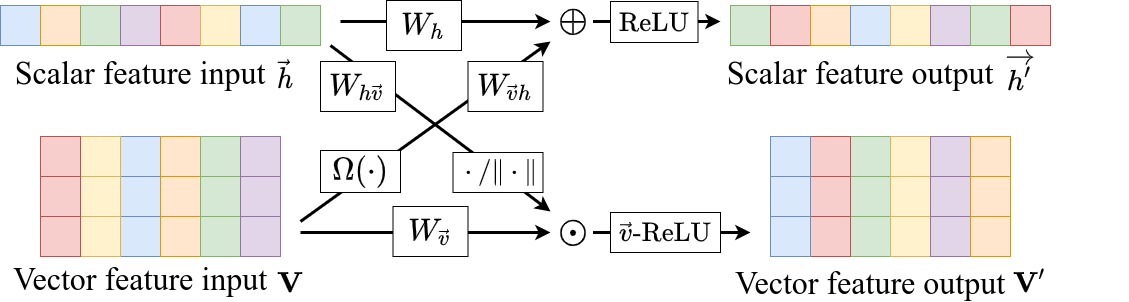

Equivariant graph convolution layers

We adopt an equivariant layer design with hybrid scalar and vector features for the graph convolution layers, which facilitates numerical robustness against geometric transformations. The equivariant mechanism is adapted from Vector Neurons [Deng et al. ICCV 2021] and EGNN [Satorras et al. ICML 2021].
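The idea behind hybrid scalar and vector features can be sketched in a few lines. This is a simplified illustration in the spirit of the Vector Neurons linear layer, not our actual graph convolution: `vn_linear`, `invariant_scalars`, and the weight values are hypothetical. Vector features are mixed only across channels, so the operation commutes with rotation (equivariance), while the norms of the vectors give scalar features that do not change under rotation (invariance).

```python
import math

def rotate_z(v, angle):
    """Rotate a 3D vector about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x - s * y, s * x + c * y, z)

def vn_linear(weights, vectors):
    """Channel-mixing linear map on vector features:
    out[j] = sum_c weights[j][c] * vectors[c].
    The 3D coordinates are never mixed with each other."""
    return [tuple(sum(w * v[d] for w, v in zip(row, vectors))
                  for d in range(3))
            for row in weights]

def invariant_scalars(vectors):
    """Rotation-invariant scalar features: the norm of each vector channel."""
    return [math.sqrt(sum(x * x for x in v)) for v in vectors]

# Two input vector channels mapped to three output channels (made-up weights).
weights = [[0.5, -1.0], [2.0, 0.25], [1.0, 1.0]]
vectors = [(1.0, 0.0, 2.0), (0.0, -1.0, 0.5)]
angle = 0.9

# Rotating the output equals applying the layer to the rotated input.
layer_then_rotate = [rotate_z(v, angle) for v in vn_linear(weights, vectors)]
rotate_then_layer = vn_linear(weights, [rotate_z(v, angle) for v in vectors])

# Scalar features are unchanged by the rotation.
scalars = invariant_scalars(vn_linear(weights, vectors))
scalars_rotated = invariant_scalars(rotate_then_layer)
```

Because the weights act only on the channel dimension, the same argument holds for any rotation, not just the z-axis rotation used here for brevity.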